The Model Life-cycle: Training Module


The Model Life-Cycle

This module has three main objectives that will provide further insight into environmental modeling:

- Define the 'model life-cycle'

- Explore the stages of a model life-cycle

- Introduce strategies for the development, evaluation, and application of models

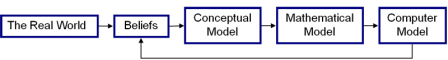

The life-cycle of a model includes identification of a problem and the subsequent development, evaluation, and application of the model.

Model:

A simplification of reality that is constructed to gain insights into select attributes of a physical, biological, economic, or social system. A formal representation of the behavior of system processes, often in mathematical or statistical terms. The basis can also be physical or conceptual.

The Process Of Simplification:

Model development teams take scientific understanding, drawn from observations of a defined system, and simplify it to a level at which it can be acceptably represented by mathematical and statistical relationships, parameterizations, or physical replications. Formal definitions of a model reflect this process of simplification:

- A representation of our understanding of the world or system of interest (EPA, 2009a)

- A simplification of reality that is constructed to gain insights into select attributes of a particular physical, biological, economic, or social system (NRC, 2007)

Model Development Team:

Comprised of model developers, users (those who generate results and those who use the results), and decision makers; also referred to as the project team.

System:

A collection of objects or variables and the relations among them.

The Process of Simplification: translating our understandings or observations of a process into a conceptual or mathematical model. This model can, in turn, help to inform our understandings of the world around us.

Models are not 'truth-generating' machines. However, they seek to combine two approaches to truth – logical or mathematical truth and scientific truth. Although scientific truth may not necessarily exist, it represents our best understandings of the processes of interest.

Relationship Between Reality And Models

Due to the complex nature of our surroundings (i.e., the 'real world' or the environment), it can be very difficult to fully describe the observed processes with mathematical interpretations. Models provide a simplification of those processes (and underlying mechanisms) that can then be used to provide information that is useful to a decision-making process.

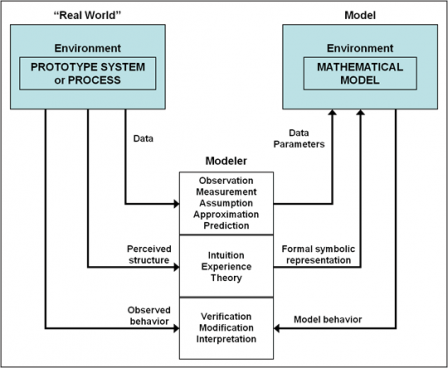

The relationship between the environment and model developers is shown in Figure 1 (from Jacoby and Kowalik, 1980). Model developers rely upon environmental data, observations, and the perceived structure of a process or environmental system to construct a model. There are, and should be, distinctions made between the 'real world' and a model. Note that model behavior and observed behavior of the environment are both interpreted by the modeler.

Figure 1. Relationships between the environment, the model development team, and the model (Jacoby and Kowalik, 1980). In this sense, the model is separate from the 'real world' and only connected through the modeler's own understandings and assumptions.


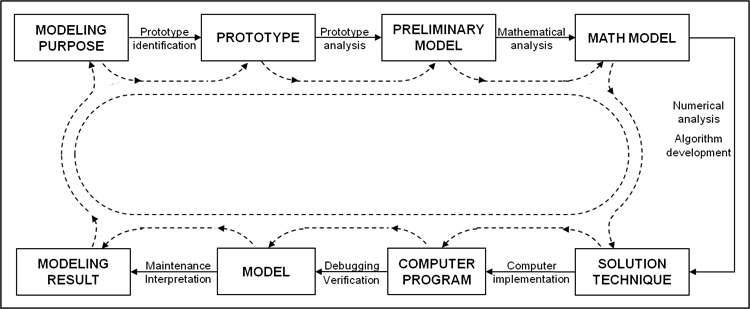

Assessment and modeling activities

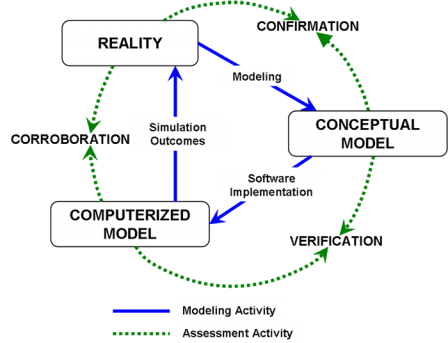

An additional diagram relating assessment and modeling activities (Figure 2) demonstrates the roles of corroboration, confirmation, and verification.

Verification:

Confirmation by examination and provision of objective evidence that specified requirements have been fulfilled. In design and development, verification concerns the process of examining a result of a given activity to determine conformance to the stated requirements for that activity. OEI: To evaluate the completeness, correctness, and conformance/compliance of a specific data set against method, procedural, or contractual requirements.

Corroboration:

Quantitative and qualitative methods for evaluating the degree to which a model corresponds to reality.

Figure 2. The processes and assessment practices that relate conceptual and computational models with reality; figure modified from DOE (2004).


Four Stages Of The Model Life-Cycle

The processes included when taking the conceptual understandings of an environmental process to a full analytical model stage are collectively called the model life-cycle. The life-cycle is broken down into four stages:

- Identification of the problem

- Development of the model

- Evaluation of the model performance

- Application

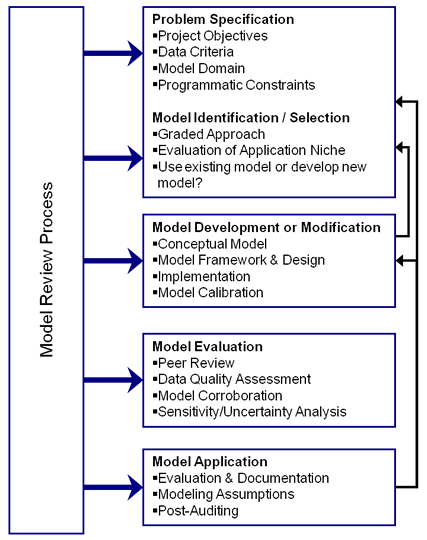

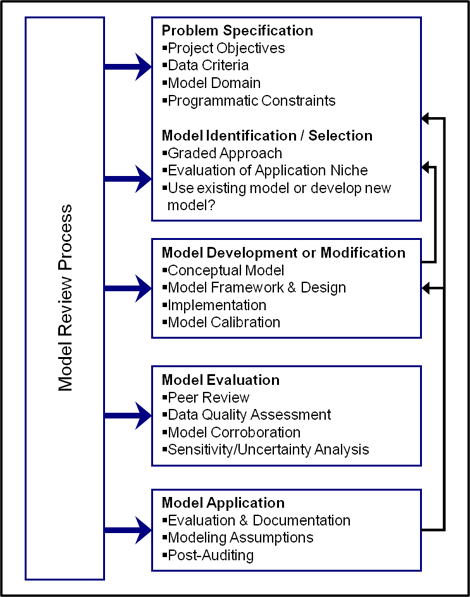

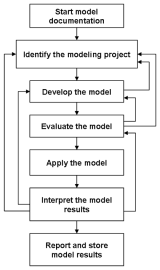

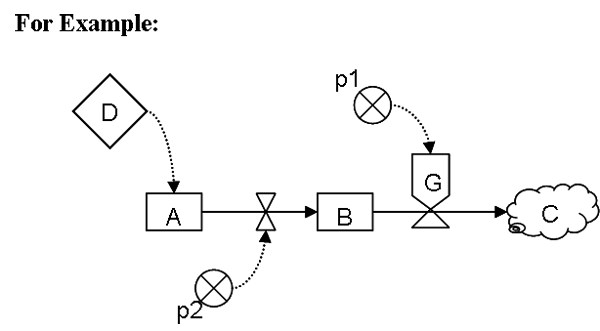

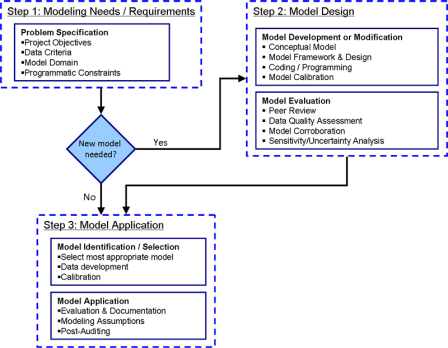

The EPA (2009a) presented a detailed life-cycle schematic depicting the review process occurring at every stage.

A detailed diagram of the model life-cycle. This diagram highlights the role of peer review during each stage (EPA, 2009a).

Peer Review:

A documented critical review of work by qualified individuals (or organizations) who are independent of those who performed the work, but are collectively equivalent in technical expertise. A peer review is conducted to ensure that activities are technically adequate, competently performed, properly documented, and satisfy established technical and quality requirements. The peer review is an in-depth assessment of the assumptions, calculations, extrapolations, alternate interpretations, methodology, acceptance criteria, and conclusions pertaining to specific work and of the documentation that supports them.


The life-cycle of a model

The steps of the model life-cycle are interconnected. Although they are often followed in succession, modelers may find themselves using model results to evaluate the model framework and revisiting earlier stages of the life-cycle.

Model Framework:

The system of governing equations, parameterization and data structures that represent the formal mathematical specification of a conceptual model consisting of generalized algorithms (computer code/software).

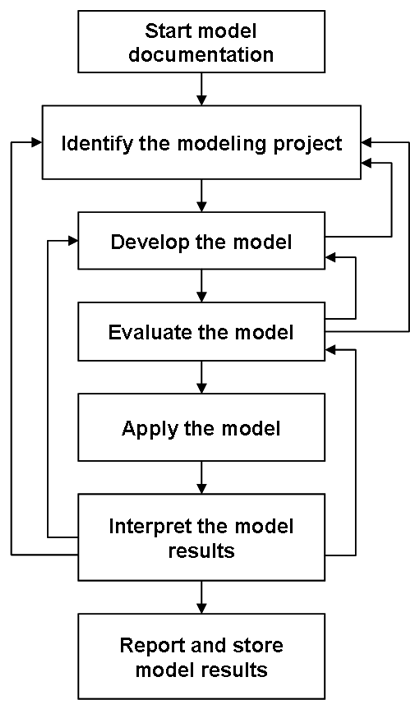

An Alternative Modeling Life-Cycle

A majority of modeling projects do not require the full development of a new model, but rather the application of an existing and established model. In this scenario, the model life-cycle would be shortened to involve model evaluation, application, and post-auditing.

Post-auditing:

Assesses a model's ability to provide valuable predictions of future conditions for management decisions.

In the modified life-cycle, a model is selected that meets the requirements of a specified problem. Once selected, a model may require calibration or different parameter values. Likewise, other qualitative evaluations of the model may further corroborate its application.

After the model has been applied, post-auditing can determine whether the model predictions were observed. The model post-audit process involves monitoring the modeled system, after implementing a remedial or management action, to determine whether the actual system response concurs with that predicted by the model.

Post-audits can also be used to evaluate how well stakeholder and decision-making roles were integrated during the development stages (Manno et al., 2008; EPA, 2009a). All of this information can further inform the model development team.

An Alternate Version of the Model Life-cycle: When model development is not required, a modified version of the life-cycle is appropriate. If an existing model will work for the specified problem, model development (and design) is circumvented, leaving three steps in the life-cycle (shown above with dashed lines). The stages of the life-cycle defined by EPA (2009a) appear in the solid boxes. Recall that model evaluation occurs during the Development and Application Stages.


Quality Assurance

The quality of a model is governed by the supporting data, model structure, scientific understanding, evaluation, etc. Quality assurance is therefore necessary throughout the stages of the modeling life-cycle.

Quality assurance (QA), quality control, and peer review all play important roles in the Agency's modeling efforts. The data that support the development of a model or that are used when running a model are subject to data quality objectives and other QA measures. Similarly, Quality Assurance Project Plans help guide model development, evaluation, and application. Together, quality assurance requirements are the means to overall model transparency.

Model Transparency:

The clarity and completeness with which data, assumptions and methods of analysis are documented. Experimental replication is possible when information about modeling processes is properly and adequately communicated.

Data and Model Quality Assurance

Additional Web Resources


Additional information (including guidance documents) can be found at the Agency's website for the Quality System for Environmental Data and Technology.

Identification Of The Problem (Stage 1 of 4)

The iterative process of model development begins when an environmental problem has been identified and it is determined that model results could inform a decision related to that problem. The identification of this type of problem is most successful when it involves not just model developers, but also the intended users and decision makers – comprising a model development team.

For each problem (and subsequent model) the system needs to be well defined. Recall that a system is a collection of objects and relations among them (EPA, 2009a).

For example, each of the systems shown below presents different challenges and application scenarios to the model development team for generic air quality models. The processes captured by each of these models may be similar, but each occurs at a different scale given the scenario.

Global Air Circulation Models

Indoor Air Quality Models

Problem Identification Strategies

The CREM Guidance Document (EPA, 2009a) outlines some strategies for problem identification:

- Understand the problem

- Write clear objectives

- Clear statement of purpose

- Potential implications/applications

- What is the purpose of the model?

- How will the model be used?

- What are the results to be used for?

- Define the system

- Temporal and spatial scales

- Process level detail

- Define the model context

- Application niche

- User community

- Required inputs

- Evaluation criteria

Application Niche:

The set of conditions under which the use of a model is scientifically defensible. The identification of application niche is a key step during model development. Peer review should include an evaluation of application niche. An explicit statement of application niche helps decision makers to understand the limitations of the scientific basis of the model.

These strategies are a very important part of the model life-cycle. The objectives, defined system, and application niche provide the foundation on which many decisions made throughout the life-cycle depend.

A Modeling Caveat


The model life-cycle is generally limited to three main stages of Development, Evaluation, and Application. The Identification stage is usually covered within Development; we gave additional attention to it in this module to provide more clarity.

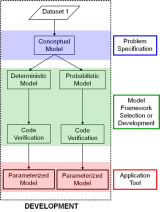

Model Development (Stage 2 of 4)

During the Development Stage of the model life-cycle, the model development team will be challenged with taking scientific understandings of a system and translating them into a conceptual model and eventually a computational model.

Computational Model:

Computational models express the relationships among components of a system using mathematical representations (Van Waveren et al., 2000).

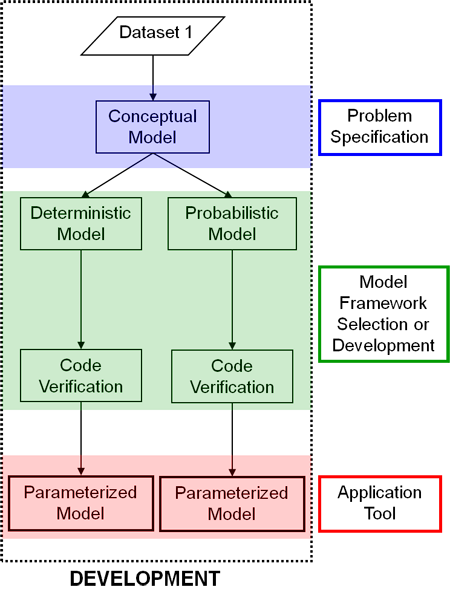

The components of the Development Stage include (EPA, 2009a):

- Problem Specification

- Conceptual Model Development

- Model Framework Selection / Development

- Application Tool Development

Additional Web Resources


For further information please see another module:

Best Modeling Practices: Development

Conceptual Models

After the problem has been identified, model developers construct a conceptual model. A conceptual model is defined as a qualitative or descriptive narrative, or a conceptual diagram of a system showing the relationships and flows among components. A conceptual model is a working hypothesis of the important factors that govern the behavior of a process of interest (Henderson and O'Neil, 2004; EPA, 2009a).

Conceptual diagrams have been used for quite some time in system dynamics studies. Jay Forrester, while at MIT, proposed a method to describe complex feedback processes occurring within a given system; diagrams of this kind have since been referred to as Forrester diagrams (Forrester, 1961).

Conceptual model of the AQUATOX model:

Diagram courtesy of the AQUATOX website.

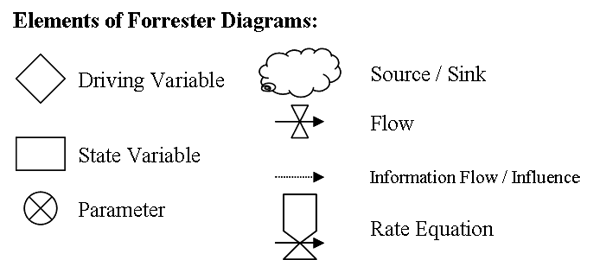

Forrester Diagrams

Forrester diagrams (Forrester, 1961) provide a standard set of shapes to describe the processes, controls, parameters, and variables in a system.

To make a diagram (conceptual model) of your system, the assumptions, state variables, and processes need to be identified first (often relying upon the work in the Identification Stage).

Variables (state or driving) are measurable or estimated quantities that describe an object or can be observed in a system and are subject to change (EPA, 2009a).

Sources/Sinks are variables that are outside the system of interest. For example, a model describing photosynthesis may require carbon dioxide (CO2) as an input, but treats the supply of CO2 as limitless and constant.

Parameters are fixed values, or constants, that can influence rate equations of flows between variables. The values of parameters may change for each simulation, but remain constant during a simulation (EPA, 2009a).

The example above depicts a simple model showing how the elements of a Forrester Diagram could be used in a conceptual model. In this hypothetical example, a chemical flows from variable A to variable B, and that flow is influenced by parameter p2. The magnitude of variable A is driven by an external variable, D (i.e., temperature). The chemical compound leaves B at the rate G and enters the sink C. Parameter p1 influences the rate G.
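The hypothetical A, B, C example can be turned into a small simulation to show how a Forrester diagram maps onto state variables, flows, and parameters. The sketch below is only an illustration: the first-order rate laws, the treatment of D as a constant inflow to A, and the function name `simulate_two_compartments` are all assumptions that do not come from the module.

```python
def simulate_two_compartments(p1, p2, d_input, a0=10.0, b0=0.0,
                              dt=0.01, steps=1000):
    """Forward-Euler simulation of the hypothetical A -> B -> C example.

    Assumed (illustrative) rate laws, not taken from the source:
      inflow to A = d_input   (driving variable D, held constant here)
      flow A -> B = p2 * A    (parameter p2 influences this flow)
      flow B -> C = p1 * B    (this is the rate G; C is a sink)
    """
    a, b, c = a0, b0, 0.0
    for _ in range(steps):
        flow_ab = p2 * a          # flow between state variables A and B
        flow_bc = p1 * b          # rate G from B to the sink C
        a += dt * (d_input - flow_ab)
        b += dt * (flow_ab - flow_bc)
        c += dt * flow_bc         # the sink is tracked only for mass balance
    return a, b, c
```

Calling `simulate_two_compartments(p1=0.2, p2=0.5, d_input=0.5)` returns the final amounts in A, B, and C. Because C collects everything that leaves B, the sum of the three values should equal the initial mass plus the total inflow, which is a simple mass-balance check on the sketch.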

Computational Model

With a conceptual model in place, the model development team should then identify the type of model needed to address the problem.

The model framework is the formal mathematical specification of the concepts and procedures of the conceptual model consisting of generalized algorithms (computer code/software) for different site or problem-specific simulations (EPA, 2009a).

The steps for translating a conceptual model into a computational model include:

- Develop appropriate algorithms

- Formulate equations

- Implement equations into computer code

- Choose hardware platforms and software

- Develop a user interface (if applicable)

- Calibration/parameter determination

Algorithm:

A precise rule (or set of rules) for solving some problem.

Calibration:

The process of adjusting model parameters within physically defensible ranges until the resulting predictions give the best possible fit to the observed data. In some disciplines, calibration is also referred to as "parameter estimation".
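As an illustration of calibration, the sketch below adjusts a single parameter within a bounded range until the sum of squared errors against observed data is smallest. The first-order decay model, the grid search, and every name (`decay_model`, `calibrate`, `k_min`, `k_max`) are hypothetical choices made for this example; real applications typically use more capable optimization methods.

```python
import math


def decay_model(k, t, c0=100.0):
    """Hypothetical model: concentration after time t with decay rate k."""
    return c0 * math.exp(-k * t)


def sum_squared_error(k, times, observed):
    """Misfit between model predictions and observations for a given k."""
    return sum((decay_model(k, t) - obs) ** 2 for t, obs in zip(times, observed))


def calibrate(times, observed, k_min, k_max, n_candidates=1001):
    """Grid search for the k in [k_min, k_max] that best fits the data.

    The bounds stand in for the 'physically defensible range' of the parameter.
    """
    step = (k_max - k_min) / (n_candidates - 1)
    candidates = [k_min + i * step for i in range(n_candidates)]
    return min(candidates, key=lambda k: sum_squared_error(k, times, observed))
```

For example, with synthetic observations generated from `decay_model(0.3, t)` at a few times, `calibrate(times, observed, 0.0, 1.0)` should recover a value close to 0.3.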

Types of Computational Models (EPA, 2009a; 2009b)

Empirical models include very little information on the underlying mechanisms and rely upon the observed relationships among experimental data.

Mechanistic models explicitly include the mechanisms or processes between the state variables.

Deterministic models provide a solution for the state variable(s) without explicitly simulating the effects of data uncertainty or variability.

Probabilistic models utilize the entire range of input data to develop a probability distribution of model output rather than a single point value.
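The difference between deterministic and probabilistic models can be sketched with a toy calculation. In the example below, the load equation, the input values, and the normal distribution assumed for concentration are all hypothetical; the point is only that the deterministic run returns one value while the probabilistic run returns a distribution of outputs.

```python
import random
from statistics import mean, pstdev


def pollutant_load(concentration, flow):
    """Hypothetical model: load = concentration * flow (arbitrary units)."""
    return concentration * flow


def deterministic_run(concentration=2.0, flow=5.0):
    """Single point estimate; input uncertainty is not simulated."""
    return pollutant_load(concentration, flow)


def probabilistic_run(n=10000, seed=0, conc_mean=2.0, conc_sd=0.2, flow=5.0):
    """Monte Carlo run: sample the uncertain input and collect the outputs."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [pollutant_load(rng.gauss(conc_mean, conc_sd), flow) for _ in range(n)]


def summarize(outputs):
    """Mean and standard deviation of the simulated output distribution."""
    return {"mean": mean(outputs), "stdev": pstdev(outputs)}
```

Here `deterministic_run()` gives a single load, whereas `summarize(probabilistic_run())` describes the spread of loads that results from the assumed variability in concentration.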

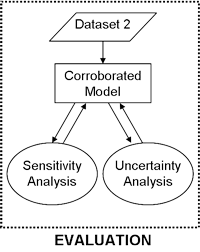

Model Evaluation (Stage 3 of 4)

There are many levels to model evaluation, each representing an integral component contributing to overall model transparency. Model evaluation is the iterative process by which we can determine whether a model and its analytical results are sufficient to agree with known data and to resolve the problem for informed decision making (EPA, 2009a).

Model evaluation begins with theoretical corroboration and can help to answer four questions of model quality, as identified by Beck (2002):

- How have the principles of sound science been addressed during model development?

- How is the choice of model supported by the quantity and quality of available data?

- How closely does the model approximate the real system of interest?

- How does the model perform the specified task while meeting the objectives set by QA project planning?

"Models will always be constrained by computational limitations, assumptions and knowledge gaps. They can best be viewed as tools to help inform decisions rather than as machines to generate truth or make decisions. Scientific advances will never make it possible to build a perfect model that accounts for every aspect of reality or to prove that a given model is correct in all aspects for a particular regulatory application. These characteristics…suggest that model evaluation be viewed as an integral and ongoing part of the life cycle of a model, from problem formulation and model conceptualization to the development and application of a computational tool."

— NRC Committee on Models in the Regulatory Decision Process (NRC, 2007)

Evaluation Components

Keeping in mind that the goal of model evaluation is to ensure model quality and representativeness for the intended application, the fundamental parts of model evaluation are (EPA, 2009a):

- Peer Review: provides a unique mechanism for independent evaluation and review of environmental models used by the Agency.

- QA Project Planning: Data quality assessment is included in the scope of quality assurance (QA) project planning. Data quality assessment informs whether a model has been developed according to sound science principles.

- Model Corroboration: Evaluating the degree to which the model corresponds to reality. Corroboration can be qualitative (theoretical) or quantitative.

- Sensitivity and Uncertainty Analyses: Sensitivity analysis computes the effect of changes in input values or assumptions (including boundaries and model functional form) on the outputs. Uncertainty analysis is used to investigate the effects of lack of knowledge or potential errors on the model.
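A minimal sketch of a sensitivity analysis is shown below, using a one-at-a-time approach in which each input is perturbed by a fixed fraction while the others are held at baseline. The steady-state concentration model, its input names, and the function `one_at_a_time_sensitivity` are hypothetical and serve only to illustrate the idea; other sensitivity methods exist and may be more appropriate for a given model.

```python
def steady_state_concentration(load, flow, decay, volume):
    """Hypothetical model: steady-state concentration in a mixed water body."""
    return load / (flow + decay * volume)


def one_at_a_time_sensitivity(model, baseline, rel_change=0.10):
    """Normalized sensitivity of the output to each input.

    Each value is (relative change in output) / (relative change in input),
    computed by perturbing one input at a time around the baseline.
    """
    base_output = model(**baseline)
    sensitivities = {}
    for name, value in baseline.items():
        perturbed = dict(baseline)
        perturbed[name] = value * (1.0 + rel_change)
        change = (model(**perturbed) - base_output) / base_output
        sensitivities[name] = change / rel_change
    return sensitivities
```

With a baseline such as `{"load": 100.0, "flow": 10.0, "decay": 0.5, "volume": 20.0}`, the result assigns a sensitivity near 1 to `load` (the output scales linearly with it) and negative values to the inputs in the denominator.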

The Evaluation Stage of the Model Life-cycle (EPA, 2009a). Peer Review and QA Project Planning are an important part of model evaluation even though they are not depicted in this diagram.

Other Aspects To Consider

Verification: Examination of the algorithms and numerical technique in the computer code to make certain that they truly represent the conceptual model and that there are no inherent numerical problems with obtaining a solution.

- Equations – assure the math is working correctly

- Dimensional analysis – units for all variables and parameters should be congruent

- Compare numerical with analytical solutions

- Steady-state composition and stability

- Compare with alternative coding
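One of the checks listed above, comparing numerical with analytical solutions, can be sketched for a case where the exact answer is known. The example assumes a first-order decay equation, dC/dt = -kC, solved with forward Euler; the equation, the solver, and the function names are illustrative choices and are not prescribed by the module.

```python
import math


def euler_decay(c0, k, dt, t_end):
    """Numerically integrate dC/dt = -k*C with the forward Euler method."""
    n_steps = int(round(t_end / dt))
    c = c0
    series = [c]
    for _ in range(n_steps):
        c += dt * (-k * c)
        series.append(c)
    return series


def analytical_decay(c0, k, t):
    """Exact solution of dC/dt = -k*C."""
    return c0 * math.exp(-k * t)


def max_abs_error(c0, k, dt, t_end):
    """Largest absolute difference between numerical and exact solutions."""
    series = euler_decay(c0, k, dt, t_end)
    return max(abs(c - analytical_decay(c0, k, i * dt))
               for i, c in enumerate(series))
```

If the code is implemented correctly, `max_abs_error` should shrink as the time step `dt` is reduced, which is the kind of evidence a verification exercise looks for.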

Model Simplification: In some instances the conceptual model may include too much detail, which can contribute to model uncertainty. It is up to the development team to define the scope of the model while considering model parsimony, the goals of the project, available resources, etc.

A Clarification on Validation and Verification

Model evaluation should not be confused with model validation. Different disciplines assign alternate meanings to these terms and they are often confused. Validated models are those that have been shown to correspond to a specific set of field data. The CREM Guidance Document (EPA, 2009a) prefers the term corroboration and focuses on the processes and techniques for model evaluation rather than model validation or invalidation.

Additional Web Resource:


For a continuation of the model evaluation topic see: Best Modeling Practices: Evaluation.

Application (Stage 4 of 4)

In the Identification Stage of the model life-cycle, the model development team identified a specific problem that required support from an analytical model. The model should be applied when the model development team (i.e., decision makers, developers, users) has determined that it is acceptable for use in a decision support capacity.

Important components of the Application Stage include (in no particular order):

- Analysis of the Modeling Scenario(s)

- Applying Multiple Models

- Model Post-Auditing

- Model Transparency


Analysis Of The Scenario

Early in the life-cycle, the model development team identified a problem that could be informed by a model application. They then defined important aspects of the problem scenario. These should include:

- Clear objective statements

- Potential applications

- The intended use of the modeled results

- Definition of the system

- Temporal and spatial scales

- Process level detail

- Model Context

- Application niche

- User community

- Required inputs

- Evaluation criteria

"All models are wrong. some are useful"

- G.E. Box, 1979

A Modeling Caveat


Models are typically (and should be) developed for a well-defined system, the application niche. The application niche is defined in the Identification Stage. The model is best suited for application within the system for which it was developed. Therefore, applications outside of the application niche should be considered carefully.

Applying Multiple Models

In certain model application scenarios, there may be multiple competing models. These models often represent varying levels of complexity; each contributes its own strengths and weaknesses.

Similarly, stakeholders may present alternative models that have been developed externally (EPA, 2009a). Multiple model comparisons can provide insight into how variable model results are among competing models or how much confidence should be put into results from just one model.

However, only models which are best suited for an application should be tested and not every model needs to be tested in every instance.
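A simple way to see how variable results are among competing models is to summarize their predictions for the same scenario. The sketch below assumes each model has already produced a single prediction; the model names and the function `compare_models` are hypothetical.

```python
from statistics import mean, pstdev


def compare_models(predictions):
    """Summarize the spread of predictions from several competing models.

    `predictions` maps a model name to its predicted value for one scenario.
    """
    values = list(predictions.values())
    if len(values) < 2:
        raise ValueError("need predictions from at least two models")
    return {
        "mean": mean(values),
        "range": max(values) - min(values),
        "stdev": pstdev(values),
    }
```

For instance, `compare_models({"simple": 4.0, "intermediate": 5.0, "complex": 6.0})` reports a mean of 5.0 and a range of 2.0. A large range relative to the mean suggests that results from any single model should be treated with caution.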

Model Post-Auditing

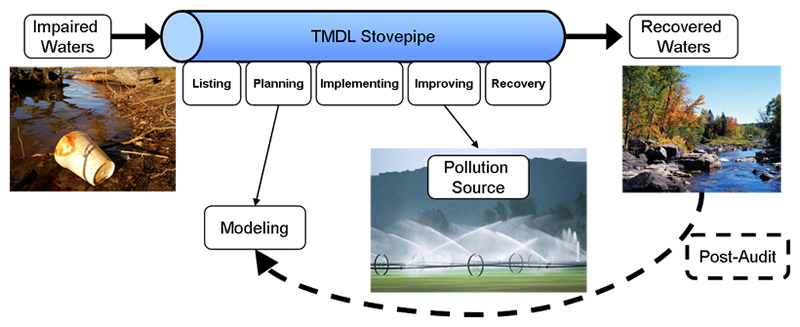

Model corroboration demonstrates how well a model corresponds to measured values or observations of the real system. A model post-audit assesses the ability of the model to predict future conditions (EPA, 2009a).

After a model has been used in decision support, post-auditing would involve monitoring the modeled system to see if the desired outcome was achieved. Post-auditing may not always be feasible due to resource constraints, but targeted audits of commonly used models may provide valuable information for improving models or model parameter estimates.
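The comparison at the heart of a post-audit can be sketched as a check of earlier model predictions against later monitoring data. The metrics chosen here (mean bias and root-mean-square error) and the function name `post_audit` are assumptions for illustration; the module does not prescribe particular statistics.

```python
import math


def post_audit(predicted, observed):
    """Compare earlier model predictions with later monitoring observations.

    Returns the mean bias (predicted minus observed) and the RMSE.
    """
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("predicted and observed must be non-empty and equal in length")
    errors = [p - o for p, o in zip(predicted, observed)]
    n = len(errors)
    bias = sum(errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return {"bias": bias, "rmse": rmse}
```

A negative bias from `post_audit(predicted, observed)` would indicate that the model under-predicted the monitored values on average, which is the kind of information that can feed back into parameter estimates.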

An example of a post-audit for a TMDL

In this example, modeling was used to help develop a management plan for recovering impaired waters by establishing Total Maximum Daily Loads (TMDLs). During implementation of the TMDL, pollution sources were identified and regulated. The outcome (i.e., recovered water) is then compared to the predictions of the model.

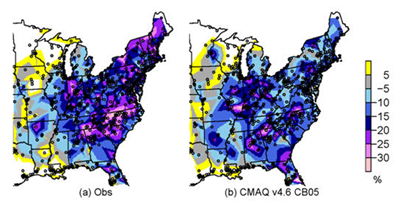


An example of a post-audit for the CMAQ Model

The Community Multiscale Air Quality (CMAQ) Model: CMAQ model simulation results were evaluated before and after major reductions in nitrogen oxides (NOx) emissions. Adapted from Gilliland et al. (2008).

Model Transparency

Models are often referred to as the 'black box' component of the scientific process. That is, the life-cycle of the model is often not transparent to decision makers, stakeholders, or the courts. By making overall transparency an explicit objective, model development teams can make the model clearer to stakeholder and user groups (Pascual, 2004).

Beneficial aspects of model transparency include:

- Enables effective communication between modelers, decision makers, and the public

- Allows models to be used reasonably and effectively in regulatory decisions

- Provides necessary documentation of model assumptions in case of future legal challenges

- Enhances the peer review process

Additional Web Resource:


For further information on model application see: Best Modeling Practices: Application

Additional Resources:


Guidance on the Development, Evaluation, and Application of Environmental Models. 2009. EPA/100/K-09/003. Office of the Science Advisor. US Environmental Protection Agency Washington, DC.

Summary

- The model life-cycle is an on-going and iterative process. There should be multiple evaluation and review activities occurring throughout the entire lifetime of a model.

- The life-cycle is composed of four stages: Problem Identification, Development, Evaluation, and Application.

- Model evaluation is done through peer review processes, quality assurance project planning, code verification, corroboration of results, sensitivity analyses, and uncertainty analyses.

- Steps of model application can include: analysis of the modeling scenario, applying multiple models, model post-auditing, and model transparency.


Best Practices for the stages of the model life-cycle

The objectives of this module were to introduce the concept of a model life-cycle and practices within each stage. There are additional modules that go into greater detail for the three main stages of the model life-cycle.


References

- Beck, B. 2002. Model evaluation and performance. In A. H. El-Shaarawi and W. W. Piegorsch. Encyclopedia of Environmetrics. Chichester. John Wiley & Sons, Ltd. 1275-1279.

- Box, G. E. 1979. Robustness in the strategy of scientific model building. In R. L. Launer and G. N. Wilkinson. Robustness in Statistics: proceedings of a workshop. New York. Academic Press: 201-236.

- DOE (US Department of Energy). 2004. Concepts of Model Verification and Validation. LA-14167-MS. Los Alamos, NM. Los Alamos National Laboratory.

- EPA (US Environmental Protection Agency). 1991. Modeling of Nonpoint Source Water Quality in Urban and Non-urban Areas. EPA-600-3-91-039. Athens, GA. Office of Research and Development.

- EPA (US Environmental Protection Agency). 2009a. Guidance on the Development, Evaluation, and Application of Environmental Models. EPA/100/K-09/003. Washington, DC. Office of the Science Advisor.

- EPA (US Environmental Protection Agency). 2009b. Using Probabilistic Methods to Enhance the Role of Risk Analysis in Decision-Making With Case Study Examples DRAFT. EPA/100/R-09/001. Washington, DC. Risk Assessment Forum.

- Forrester, J. 1961. Industrial Dynamics. Waltham, MA. Pegasus Communications.

- Gilliland, A. B., C. Hogrefe, R. W. Pinder, J. M. Godowitch, K. L. Foley and S. T. Rao. 2008. Dynamic evaluation of regional air quality models: Assessing changes in O3 stemming from changes in emissions and meteorology. Atmospheric Environment 42: 5110-5123.

- Henderson, J. E., and O'Neil, L. J. 2004. Conceptual Models to Support Environmental Planning and Operations. SMART Technical Notes Collection, ERDC/TN SMART-04-9, U.S. Army Engineer Research and Development Center, Vicksburg, MS.

- Jacoby, S. L. S. and J. S. Kowalik. 1980. Mathematical Modeling with Computers. Englewood Cliffs, NJ. Prentice-Hall, Inc.

- Manno, J., R. Smardon, J. V. DePinto, E. T. Cloyd and S. Del Granado. 2008. The Use of Models In Great Lakes Decision Making: An Interdisciplinary Synthesis. Randolph G. Pack Environmental Institute, College of Environmental Science and Forestry. Occasional Paper 16.

- NRC (National Research Council). 2007. Models in Environmental Regulatory Decision Making. Washington, DC. National Academies Press.

- Pascual, P. 2004. Building The Black Box Out Of Plexiglass. Risk Policy Report 11(2): 3.

- Van Waveren, R. H., S. Groot, H. Scholten, F. Van Geer, H. Wösten, R. Koeze and J. Noort. 2000. Good Modelling Practice Handbook. STOWA report 99-05. Lelystad, The Netherlands. STOWA, Utrecht, RWS-RIZA, Dutch Department of Public Works.