The Legal Aspects of Modeling: Training Module

Introduction

The U.S. Environmental Protection Agency routinely uses environmental models to pursue its mission of protecting human health and safeguarding the natural environment (EPA, 2009).

Models have been defined as representations of our understanding of the world or a system of interest, constructed to gain insights into select attributes of a particular physical, biological, economic, or social system (NRC, 2007; EPA, 2009).

It is well understood that these representations [models] are simplifications of reality. Yet, even given this limitation, models are increasingly important analytical tools to inform decision making.

In this module we will explore examples of legal challenges directed at the modeling used to inform Agency decisions and offer best modeling practices to reduce the likelihood of legal challenges.

This module builds upon many of the environmental modeling concepts introduced and discussed in other training modules. For background information please see the CREM Guidance Document (EPA, 2009) or these other modules.

The Model Life-cycle

The model life-cycle is given complete discussion in its own module. Throughout this module, we will present legal challenges against the Agency and align them with best modeling practices identified in EPA (2009).

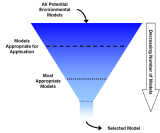

The process of taking the conceptual understanding of an environmental process to a full analytical model is called the model life-cycle. The life-cycle is broadly divided into four stages:

- Problem Identification

- Development of the model

- Evaluation of the model performance

- Application of the model

Sometimes, problem specification is not explicitly included in the life-cycle. Also, in instances where there is an adequate existing model, a step for model selection may be added (see model life-cycle figure). Regardless, all components of the model life-cycle should be well documented and some degree of peer review is an important process throughout the life-cycle.

A detailed diagram of the model life-cycle highlighting peer review of each stage (EPA, 2009).

Peer review: A documented critical review of work by qualified individuals (or organizations) who are independent of those who performed the work, but are collectively equivalent in technical expertise. A peer review is conducted to ensure that activities are technically adequate, competently performed, properly documented, and satisfy established technical and quality requirements. The peer review is an in-depth assessment of the assumptions, calculations, extrapolations, alternate interpretations, methodology, acceptance criteria, and conclusions pertaining to specific work and of the documentation that supports them.

Quality Assurance

The EPA provides requirements for the conduct of quality management practices, including quality assurance (QA) and quality control (QC) activities, for all environmental data collection and environmental technology programs performed by or for this Agency. The development, evaluation, and use of computer or mathematical models (and their input data) that characterize environmental processes or conditions are covered under this policy (EPA, 2000).

Quality Assurance Project Plans (QAPPs) are an example of a required practice for the Agency’s modeling activities [see for example EPA (2004)]. They can serve as a platform for dialogue (between the Agency and external parties) during the planning process and promote quality and transparency, reducing vulnerability to legal challenges. For example, the Region 1 QAPP template contains elements for documenting:

- Model selection and application niche

- Quality of model input data

- Procedures for excluding data, including outdated data

- Initial and final assumptions and bases thereof

- Model validation

- Modeling journal for the entire modeling process
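The documentation elements listed above can be tracked as a simple checklist. The following is a minimal sketch only: the class name, field names, and placeholder text are hypothetical and do not reproduce the structure of the Region 1 QAPP template. It shows one way a modeling team might flag elements that still lack documentation.

```python
from dataclasses import dataclass, fields


@dataclass
class QappModelingRecord:
    """Hypothetical record with one text field per documentation element."""
    model_selection_and_niche: str = ""
    input_data_quality: str = ""
    data_exclusion_procedures: str = ""
    assumptions_and_bases: str = ""
    model_validation: str = ""
    modeling_journal: str = ""


def missing_elements(record: QappModelingRecord) -> list[str]:
    """Return the names of elements that have no documentation yet."""
    return [f.name for f in fields(record) if not getattr(record, f.name).strip()]


# Illustrative placeholder entries, not real project documentation.
record = QappModelingRecord(
    model_selection_and_niche="Placeholder: why this model fits the decision context",
    input_data_quality="Placeholder: sources and quality checks for input data",
)
print(missing_elements(record))
```

A check like this does not replace the QAPP itself; it only makes gaps in the documentation visible early in the planning process.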

Legal Aspects

When EPA decides to use a model for one or more regulation-relevant purposes, the model normally goes through some internal and external oversight to ensure that it meets scientific, stakeholder, and public approval (NRC, 2007), in accordance with the Administrative Procedure Act (see 5 U.S.C. § 553).

Models used for other regulatory purposes (those outside of rule-makings) are generally not subjected to the extensive internal and external review requirements described above, and in greater detail later in this module. For example, models used for enforcement are generally not required to go through peer review or even public notice-and-comment, but they are required to at least gain judicial acceptance before a court will enter penalties against a violator based on the model.

EPA must abide by several laws and Executive Orders that guide the process by which regulations are developed. They direct EPA to consider issues of concern to the President, Congress, and the American public.

Legal Challenges

In a general sense, legal challenges to the Agency’s actions brought pursuant to the Administrative Procedure Act (see 5 U.S.C. § 553) can be classified into two categories: process challenges and substantive challenges (adapted from McGarity and Wagner, 2003; NRC, 2007). Lawsuits against the Agency's use of models typically challenge (NRC, 2007):

- How the model was developed and evaluated (scientific components of the model, performance evaluation, peer review, etc.)

- How and whether it was appropriately applied (the context in which the model was applied, e.g. application niche)

- Technical decisions within the Agency that have important consequences (e.g. economic impacts) on the results from models

Application niche: The set of conditions under which the use of a model is scientifically defensible. The identification of application niche is a key step during model development. Peer review should include an evaluation of application niche. An explicit statement of application niche helps decision makers to understand the limitations of the scientific basis of the model.

Process Challenges

Process challenges are usually directed at the overall transparency of the modeling exercise and the adequacy of any notice and opportunity for public comment that the agency might be required to provide. In its defense, the Agency may contend that judicial review is inappropriate because it has not yet engaged in final agency action or that its action is not yet “ripe” for review.

Substantive Challenges

These challenges are mounted against areas of technical disagreements with the underlying science and assumptions of the model. Courts often consider these challenges in detail; when they discover that the disagreement concerns a battle of the experts, they typically defer to the Agency’s judgment.

Process Challenges

EPA is required by the Administrative Procedure Act (5 U.S.C. § 553) to provide the public notice and an opportunity to comment on its rulemakings. If a rule is supported by a model, this legal obligation means the Agency must provide the public notice of the Agency's use of the model and an opportunity to comment on the assumptions and algorithms that are built into the model, along with the other scientific components of the regulation or rule-making.

Additional Resources:

Guidelines for Ensuring and Maximizing the Quality, Objectivity, Utility, and Integrity of Information Disseminated by the EPA. 2002. EPA/260R-02-008. Office of Environmental Information. Washington, DC.

Examples of Process Challenges

McLouth Steel Products Corp. v. Thomas, 838 F.2d 1317 (D.C. Cir. 1988)

The McLouth Steel Products Corporation (McLouth) petitioned EPA to de-list a waste stream from its list of hazardous wastes. EPA had used a vertical and horizontal spread model (VHS) to predict the leachate levels of the hazardous components of McLouth's waste.

McLouth argued that EPA had never subjected the model to public notice and comment and challenged the use of the model in this very limited rulemaking proceeding. The court agreed, rejecting EPA's contention that the model [use] was just a policy statement and not a legislative rule. The court remanded the matter to the EPA and held that EPA gave the effect of a rule to its VHS model without having exposed the model to the comment process required for rules.

Chemical Manufacturers Assn. (CMA) v. U.S. EPA, 28 F.3d 1259 (D.C. Cir. 1994)

Chemical Manufacturers Association (CMA) argued that EPA's decision to remove sampling of actual emissions (to compare against reference values) had the effect of hinging the Agency's decision entirely upon reference values and predicted results from a generic air dispersion model. Furthermore, CMA argued that the Agency had not given public notice that it would give so much weight to the database and model for the final rule.

The court determined that EPA had adequately subjected the generic air dispersion model to notice-and-comment rulemaking and in doing so had explained the basis for the model, stated its rationale for making various assumptions, requested comments on the assumptions, and revised some modeling parameters based upon the comments it received.

Best Modeling Practices

The best modeling practices that can be learned from these examples are transparency and peer review. A complete model history documenting the justification for various decisions related to model design and development may help the agency defend a model against formal challenges (NRC, 2007). The Agency has established guidance for peer review (EPA, 2006). Transparency can be fostered by using and following a Quality Assurance Project Plan (see EPA, 2010 or EPA's Quality System for Environmental Data and Technology).

Substantive Challenges

Substantive challenges have been mounted against areas of technical disagreement with the underlying science and assumptions of a model. Courts often consider these challenges in detail but typically defer to the Agency's judgment. Deference is particularly appropriate if the decision requires significant expertise, such as where the decision involves scientific facts in the Agency's area of expertise, or where the decision is on the frontiers of science or in an area of significant scientific uncertainty. See, for example, Baltimore Gas & Elec. Co. v. NRDC, 462 U.S. 87, 103 (1983):

“[A] reviewing court must remember that the Commission is making predictions, within its area of special expertise, at the frontiers of science. When examining this kind of scientific determination, as opposed to simple findings of fact, a reviewing court must generally be at its most deferential.”

The substantive challenges may be described as:

- Challenges to the scientific components of the model

- Challenges to the evaluation process of a model

- Challenges related to the appropriateness of the Agency's application of a model within the larger context

This is also shown in a previous figure outlining the regulation and research processes.

The Substantive Challenges:

Scientific Components

Legal challenges to the scientific components of a model may be related to the scientific assumptions (often simplifications), the data quality or quantity used to develop the model, or adjustments made to the model.

Evaluation Process

Model evaluation is an iterative process by which we can determine whether a model and its analytical results are sufficient to agree with known data and to resolve the problem for informed decision making (EPA, 2009). Legal challenges are typically made to the validity of the model (e.g. whether it had been corroborated against sufficient data before application) or the findings of the peer review process (these are challenges to the conclusions of peer review, not the process of peer review).

Model Application

Other challenges are made against the context in which the model was applied. This can include departures from prior model applications, alternative models, or inadequate explanation of the final output.

Substantive Challenges: Peer Review

The peer review process is an essential practice throughout the life-cycle of a model. Legal decisions (provided as examples throughout this module) highlight the importance of appropriate scientific assumptions of the models which are supported by peer review.

One of the best practice recommendations from EPA (2009) is for model developers and users to subject their model to credible, objective peer review throughout the model life-cycle.

Peer review is a process that ensures the model meets scientific, stakeholder, and other external approval. The process provides an independent, expert review of model development, evaluation, and application. Therefore, its purpose is two-fold:

- To evaluate whether the assumptions, methods, and conclusions derived from environmental models are based on sound scientific principles.

- To check the scientific appropriateness of a model for informing a specific regulatory decision. (The latter objective is particularly important for secondary applications of existing models.)

Additional Resource:

Peer Review Handbook. 2006. EPA/100/B-06/002. Science Policy Council, US Environmental Protection Agency Washington, DC.

Substantive Challenges: Underlying Assumptions

Models are often designed to be as simple as possible, which opens them to challenges against the degree of simplification or the validity of a model's assumptions. The following cases are illustrative:

- American Forest & Paper Ass'n v. U.S. EPA

- Chlorine Chemistry Council v. U.S. EPA

- Appalachian Power Co. v. U.S. EPA

- Mision Indus., Inc. v. U.S. EPA

EPA (2009) recommends clearly defining and documenting the scientific assumptions made during model development. A common way to communicate the assumptions and guidelines for model application is through a model “user’s guide” or modeling journal (e.g. EPA, 2010). Another best practice during model development is problem specification and ensuring that the scope and level of detail of the model meet the needs of the decision.

American Forest & Paper Assn v. U.S. EPA, 294 F.3d 113 (D.C. Cir. 2002)

American Forest and Paper Association challenged EPA's reliance on conservative assumptions regarding how to extrapolate from toxicity studies on animals to humans. These assumptions were pivotal to EPA's refusal to delete methanol from the list of hazardous air pollutants under the Clean Air Act. The court rejected this challenge, finding that EPA's assumptions were well supported and fully justified and therefore not arbitrary or capricious.

Chlorine Chemistry Council v. U.S. EPA, 206 F.3d 1286 (D.C. Cir. 2000)

Petitioners sought review of an EPA order that set the maximum contaminant level goal for chloroform at zero under the Safe Drinking Water Act, contending that EPA had not considered the best available evidence in arriving at that figure. The D.C. Circuit set aside EPA's assumption of a linear dose-response model for determining the carcinogenicity of chloroform because of a developing consensus by a scientific expert panel that chloroform was not harmful at low doses (a conclusion inconsistent with a linear dose-response assumption, which implies some risk at any dose).

Appalachian Power Co. v. U.S. EPA (II), 249 F.3d 1032 (D.C. Cir. 2001)

Appalachian Power Company successfully challenged the Agency's use of a model for predicting growth rates of electricity usage in setting emissions controls. The court found that the assumptions of the model – and the subsequent predictions of a decrease in power consumption – were arbitrary because they were not supported by the available evidence.

However, the court did note that EPA had the authority to develop generic, abstracted models for such predictions, provided that the assumptions are based on the best available evidence.

Mision Indus., Inc. v. U.S. EPA, 547 F.2d 123 (1st Cir. 1976)

Petitioners challenged EPA's use of an air diffusion model on the basis that it was inadequate because it did not account for terrain turbulence and only included a limited number of weather stations. The court found that the Agency's justifications for the simplifications were sufficient and rejected the challenge.

Substantive Challenges: Data and Statistics

Legal challenges against the data that support the modeling process are rarely successful. Typically the courts have deferred to the Agency's expertise in the area of technical decision making. Challenges within this category have been further described as (McGarity and Wagner, 2003):

- Unrepresentative Data

- Flawed Studies or Calculations

- Outdated Studies

- Data Are Wrongly Excluded

- Data Are Insufficient for a Particular Application

- Statistical Decisions

Best Practices: During model development the data requirements of the model should be clearly identified as well as the criteria for selecting data (EPA, 2009). The evaluation and performance criteria should also be established and justified before model application.

American Iron & Steel Institute v. U.S. EPA, 115 F.3d 979 (D.C. Cir. 1997)

Industry groups challenged a Final Water Quality guidance arguing that the Agency impermissibly provided that regulation could be based on only one measurement of a discharge in determining the concentration of pollutants in effluent, rather than requiring multiple data sets that better represented variability. The court rejected the challenge because EPA had, among other things, conditioned the regulation on the requirement that the permitting authority first make a finding that the single measurement was valid and representative.

American Iron & Steel Institute v. U.S. EPA, 115 F.3d 979 (D.C. Cir. 1997)

The EPA's selection of a confidence interval for determining permissible effluent quality or monthly maximum effluent limitations was upheld because the Agency adequately explained its selection and demonstrated that it was consistent with the statute and past EPA practices.

Mision Indus., Inc. v. U.S. EPA, 547 F.2d 123 (1st Cir. 1976)

The court deferred to EPA's use of an air diffusion model that was afflicted with a large statistical potential for error. The court found that the Agency had adequately explained that the errors were generally high only with respect to short-term concentrations and that the model's conservative assumptions compensated for the errors.

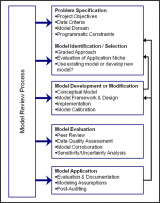

Substantive Challenges: Model Evaluation

Other types of legal challenges focus on whether the model has been shown to be valid for a particular application. In this context, validation is a process of determining whether a model has been shown to correspond to a specific set of field data (Van Waveren et al., 2000). The EPA (2009) recommends using the process of model evaluation (corroboration, peer review, etc.) rather than model validation or invalidation when applying an appropriate model.

Generally, courts have deferred to the Agency's expertise when deciding whether site-specific corroboration should be conducted before a model is applied.
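As a rough illustration of what corroboration against field data can involve, the sketch below compares model predictions with paired observations using mean bias and root-mean-square error. These two metrics, the function name, and the numbers are assumptions chosen for illustration; the source does not prescribe particular statistics, and the values are invented rather than drawn from any case discussed here.

```python
import math


def corroboration_summary(predicted, observed):
    """Summarize agreement between paired model predictions and field data."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("predicted and observed must be non-empty and equal in length")
    residuals = [p - o for p, o in zip(predicted, observed)]
    n = len(residuals)
    mean_bias = sum(residuals) / n  # positive values indicate over-prediction
    rmse = math.sqrt(sum(r * r for r in residuals) / n)
    return {"n": n, "mean_bias": mean_bias, "rmse": rmse}


# Invented example values (e.g. concentrations at three monitoring sites).
summary = corroboration_summary([10.0, 12.0, 9.0], [11.0, 12.0, 7.0])
print(summary)
```

Whether such agreement is adequate depends on performance criteria established and justified before the model is applied, which is the kind of record that supports the Agency's explanation if the application is challenged.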

Additional Web Resource

For more discussion of model evaluation please see the module titled: Best Modeling Practices: Model Evaluation

Ohio v. U.S. EPA, 784 F.2d 224 (6th Cir. 1986)

EPA applied the CRSTER model to predict the dispersion of emissions from two power plants located on Lake Erie. Even though the model had been corroborated in the past, petitioners objected to its application. The court concluded that EPA had not adequately shown that the CRSTER model took into account the meteorological and geographic problems of the two power plants. The court further concluded that it was therefore arbitrary and capricious for EPA to allow a 400% increase in emissions without evaluating, validating, or empirically testing the model at the site. EPA was ordered to develop a plan for corroborating the CRSTER estimates of emissions from the two plants.

Substantive Challenges: Model Application

The process of model application should be preceded by documented decisions regarding model selection when multiple models exist. During model application, results from the model are used to inform a decision or regulation.

These challenges can be described as:

- The Agency's use of a model that constitutes a significant departure from prior model applications

- The existence of alternative models that presumably provide different results

- Instances when the Agency does not provide adequate explanation of the model results

The following cases are illustrative:

- Natural Resources Defense Council v. Muszynski

- Chemical Manufacturers Assn. v. U.S. EPA

Best Practices

Quality assurance plans provide the appropriate documentation that can increase model transparency. Effective communication of the modeling results and how they were included in the decision-making or regulatory process also contributes to overall transparency, thus reducing overall legal vulnerability.

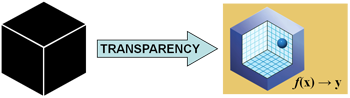

Model Application

Model application (i.e., model-based decision making) is strengthened when the science underlying the model is transparent. The elements of transparency emphasized in the CREM Guidance Document (EPA, 2009) are:

- Comprehensive documentation of all aspects of a modeling project (suggested as a list of elements relevant to any modeling project)

- Effective communication between modelers, analysts, and decision makers

Additional Web Resource:

Model application is discussed in detail in the following module: Best Modeling Practices: Application.

Natural Resources Defense Council v. Muszynski, 268 F.3d 91 (2d Cir. 2001)

EPA successfully defended a challenge mounted by various environmental groups to a model used in its total maximum daily loads (TMDL) for phosphorus in eight New York reservoirs. The Agency explained that it was basing its decision on the best available scientific information and that it intended to refine its model as better information surfaced.

The Second Circuit upheld the district court's ruling that the Clean Water Act did not require TMDLs to be expressed in terms of daily loads. It thus agreed with EPA that a "total maximum daily load" may be expressed by another measure of mass per time, where such an alternative measure best served the purpose of effective regulation of pollutant levels in New York's waterbodies. However, the court remanded to EPA for further explanation of why annual loads were appropriate in the case of New York's phosphorus TMDLs.

Chemical Manufacturers Ass'n v. U.S. EPA, 28 F.3d 1259 (D.C. Cir. 1994)

The Chemical Manufacturers Association challenged EPA's use of a generic air dispersion model to predict the concentrations of an air toxin (Methylene Diphenyl Isocyanate, MDI) in the ambient air surrounding emitting facilities. The court found that EPA's application of the model to MDI emissions was arbitrary as EPA's explanation provided no "rational relationship between the model and the known behavior of the hazardous air pollutant to which it is applied." Id. at 1265.

Additional Key Findings

In addition to the potential process and substantive legal challenges defined in this module, several significant rulings address the Agency's use of environmental models to support decision making. They are summarized below.

Courts generally uphold the agency's choice of a scientific model if it bears a rational relationship to the characteristics of the situation or data to which it is applied. However, the Agency must explain the rationale and factual basis for its decision (see Bowen v. American Hospital Assn., 106 S.Ct. 2101; Pascual, 2009).

Pascual (2009) explains the ruling process:

"A court will rule that an agency has acted arbitrarily and capriciously if the agency: reached a decision based on factors the US legislature did not intend; failed to consider an important aspect of the problem; or offered explanations that run counter to the evidence or that are so implausible that the explanations cannot be ascribed to agency expertise or to a difference in view."

Sierra Club v. U.S. Forest Serv., 878 F. Supp. 1295, 1310 (D.S.D. 1993)

"As long as an agency reveals the data and assumptions upon which a computer model is based, allows and considers public comment on the use or results of the model, and ensures that the ultimate decision rests with the agency, not the computer model, then the agency use of a computer model to assist in decision making is not arbitrary and capricious." Id. at 1310.

American Iron & Steel Inst. v. U.S. EPA, 115 F.3d 979 (D.C. Cir. 1997)

Courts will invalidate the use of a model if there is no rational relationship between the model chosen and the situation to which it is applied.

Small Refiner Lead Phase-Down Task Force v. U.S. EPA, 705 F.2d 506 (D.C. Cir. 1983)

"Any model is an abstraction from and simplification of the real world. . . . We will . . . look for evidence that the agency is conscious of limits of the model. Ultimately, however, we must defer to the agency's decision on how to balance the cost and complexity of a more elaborate model against the oversimplification of a simpler model. We can reverse only if the model is so oversimplified that the agency's conclusions from it are unreasonable." Id. at 535.

Summary

Generally, a complete model history [e.g. a model journal sensu Van Waveren et al. (2000) or EPA (2010)] that documents the justification for various decisions related to model design, development, evaluation, and application may help the agency defend a model against formal legal challenges (NRC, 2007) by increasing overall transparency of the model.

- The Agency’s use of models can be subjected to both Process and Substantive Challenges

- Process challenges are usually directed at the overall transparency of the modeling exercise and the adequacy of any notice and opportunity for public comment that the agency might be required to provide

- Substantive challenges include those that question the assumptions, the quality of the supporting data, the evaluation of a model, and the context in which it was applied.

Models are often referred to as the 'black box' component of the scientific process. That is, the life-cycle of the model is often not transparent to decision makers, stakeholders, or the courts. Quality assurance plans are one means of increasing transparency. By making transparency an explicit objective, modeling teams can make the model more accessible to stakeholders and user groups and strengthen the Agency’s ability to respond successfully to legal challenges (Pascual, 2004).

Recommendations

The NRC (2007) suggests that a more rigorous and formalized approach to model development, evaluation, and application may ward off legal challenges.

Suggested best practices of the model life-cycle are defined by EPA (2009). In general, proper documentation throughout the model life-cycle facilitates peer review and increases the transparency of 'black-box' models. Peer review is an essential part of the modeling life-cycle that ensures the model meets scientific, stakeholder, and other external approval.

The other modules developed from this guidance document provide an in-depth explanation of the best practices, with case studies when available.

References

- Administrative Procedure Act. 1946. U.S. Code. Title 5, Part I, Chapter 5. Report of the House Judiciary Committee, No. 1989, 79th Congress.

- Beck, B., L. Mulkey and T. Barnwell. 1994. Model Validation for Exposure Assessments. DRAFT. Athens, GA: US Environmental Protection Agency.

- EPA (US Environmental Protection Agency). 2000. EPA Quality Manual for Environmental Programs. CIO-2105-P-01-0. Washington, DC. Office of Environmental Information.

- EPA (US Environmental Protection Agency). 2002. Guidelines for Ensuring and Maximizing the Quality, Objectivity, Utility, and Integrity of Information Disseminated by the Environmental Protection Agency. EPA/260R-02-008. Washington, DC. Office of Environmental Information.

- EPA (US Environmental Protection Agency). 2004. The Lake Michigan Mass Balance Project: Quality Assurance Plan for Mathematical Modeling. EPA-630-R-04-018. Duluth, MN. National Health and Environmental Effects Research Laboratory, Office of Research and Development.

- EPA (US Environmental Protection Agency). 2006. Peer Review Handbook. EPA/100/B-06/002. Washington, DC. Science Policy Council.

- EPA (US Environmental Protection Agency). 2009. Guidance on the Development, Evaluation, and Application of Environmental Models. EPA/100/K-09/003. Washington, DC. Office of the Science Advisor.

- EPA (US Environmental Protection Agency). 2010. Modeling Journal: Checklist and Template. New England Office, New England Regional Laboratory's Office of Environmental Measurement and Evaluation, Quality Assurance Unit.

- Fisher, E., P. Pascual and W. Wagner. 2010. Understanding Environmental Models in Their Legal and Regulatory Context. Journal of Environmental Law 22(2): 251-283.

- McGarity, T. O. and W. E. Wagner. 2003. Legal Aspects of the Regulatory Use of Environmental Modeling. Environmental Law Reporter 33(10): 10751-10774.

- NRC (National Research Council). 2007. Models in Environmental Regulatory Decision Making. Washington, DC. National Academies Press.

- Pascual, P. 2004. Building The Black Box Out Of Plexiglass. Risk Policy Report 11(2): 3.

- Pascual, P. 2009. Evidence-based decisions for the wiki world. International Journal of Metadata, Semantics and Ontologies 4(4): 287-294.

- Van Waveren, R. H., S. Groot, H. Scholten, F. Van Geer, H. Wösten, R. Koeze and J. Noort. 2000. Good Modelling Practice Handbook. STOWA report 99-05. Lelystad, The Netherlands. STOWA, Utrecht, RWS-RIZA, Dutch Department of Public Works.

Cases Presented in Order of Appearance

- McLouth Steel Products Corp. v. Thomas, 838 F.2d 1317 (D.C. Cir. 1988)

- Chemical Manufacturers Ass'n v. U.S. EPA, 28 F.3d 1259 (D.C. Cir. 1994)

- Baltimore Gas & Elec. Co. v. NRDC, 462 U.S. 87, 103 (1983)

- American Forest & Paper Ass'n v. U.S. EPA, 294 F.3d 113 (D.C. Cir. 2002)

- Chlorine Chemistry Council v. U.S. EPA, 206 F.3d 1286 (D.C. Cir. 2000)

- Appalachian Power Co. v. U.S. EPA (II), 249 F.3d 1032 (D.C. Cir. 2001)

- Mision Indus., Inc. v. U.S. EPA, 547 F.2d 123 (1st Cir. 1976)

- American Iron & Steel Institute v. U.S. EPA, 115 F.3d 979 (D.C. Cir. 1997)

- Ohio v. U.S. EPA, 784 F.2d 224 (6th Cir. 1986)

- Natural Resources Defense Council v. Muszynski, 268 F.3d 91 (2d Cir. 2001)

- Bowen v. American Hospital Assn., 106 S.Ct. 2101 (1986)

- Sierra Club v. U.S. Forest Serv., 878 F. Supp. 1295 (D.S.D. 1993)

- Small Refiner Lead Phase-Down Task Force v. U.S. EPA, 705 F.2d 506 (D.C. Cir. 1983)