EPA Science Matters Newsletter: Volume 2, Number 4

Published August 2011

Executive Message

- Executive Message: Advancing Science at EPA

A message from Paul T. Anastas, Ph.D., Assistant Administrator, EPA Office of Research and Development

Building a sustainable world—one that meets the needs of today’s generation while preserving the ability of future generations to meet theirs—is the single most important challenge of our time.

Albert Einstein once said that “problems can’t be solved at the same level of awareness that created them.” Indeed, to address and overcome the challenges we face, we must gain greater awareness, more knowledge, and new information. For our pursuit of a sustainable world, this means constantly questioning our approaches and always seeking out innovative solutions.

While continuing to advance the kinds of cutting-edge research efforts that are the hallmark of EPA, we are also taking ambitious steps to ensure that we are as effective as we can be for the American people. These steps, known collectively as the “Path Forward,” ensure that all of EPA’s scientific work is conducted in a way that advances the goal of sustainability. As commonly defined, sustainability means meeting the needs of the current generation while preserving the ability of future generations to meet their needs. At EPA, it means doing all that we can not only to protect Americans today, but also to ensure a healthy environment for our children, their children, and beyond. Achieving this ambitious goal will require the best minds to work together across disciplines in an atmosphere of innovation and creativity. It will also require broad thinking and a holistic, “systems” perspective to ensure that our science and research today can advance EPA’s mission into the future.

We are doing just that. By bringing together experts from nearly every environmental discipline, we are building upon our demonstrated excellence in measuring, monitoring, and assessing environmental problems to inform and empower sustainable solutions. We are breaking down traditional scientific silos by forging truly transdisciplinary research areas. We are establishing programs to encourage transformative innovations that simultaneously reduce environmental risk and grow the economy. We are developing new ways to communicate and maximize our impact, and we are engaging with experts, stakeholders, and communities like never before. We have embraced the goal of sustainability and, as a result, are more effectively conducting research for human health and environmental protection across the United States.

We have spent the past year and a half working to align all of our work with the concept of sustainability. These efforts have spanned the entire spectrum of our work, from the structure of our research programs, to the nature of our interactions with programs and regions, to new internal awards, projects, trainings, and communication strategies. On October 1, we will produce a research strategic plan that will be the culmination of these efforts. Our scientists and engineers have worked with partners inside and outside the Agency to make this plan responsive to pressing environmental challenges. Accomplishing the science and technology tasks included in this plan will play an important role in safeguarding human health and the environment.

This is an exciting time for EPA. Our focus on sustainability will ensure that we not only continue our important work protecting the American people and their environment, but that we do it in a way that preserves the health of our economy and future generations.

Paul T. Anastas, Ph.D.

Assistant Administrator

U.S. EPA, Office of Research and Development


- Game On: Can Serious Games Help Inform Serious Environmental Challenges?

EPA researchers partner with IBM to provide data and science for CityOne, a serious game with real-world potential.

By 2050, the population of the world’s cities is expected to double, meaning some two-thirds of the world’s population will live in cities. What will the quality of life be in such burgeoning metropolises? Will they be thriving models of efficiency, with diverse, robust economies and plentiful municipal services delivering electricity, drinking water, and other necessities through cutting-edge technologies and “smart grid” networks?

Or will tomorrow’s cities look more like larger, more crowded versions of some of today’s most challenging places to live: sprawling urban areas marred by stifling traffic, poor air quality, and overburdened, leaky public water infrastructure?

Determining what it will take to build the livable cities of tomorrow is the focus of research in fields as diverse as economics, civil engineering, sociology, clean air, energy, and human health risk assessment. It is also the focus of a game that takes advantage of real-world EPA research and engineering efforts.

The game, called CityOne, is part of a growing genre of “serious games” developed as experiential learning tools to help users gain valuable insights into complex processes and systems. EPA’s involvement was sparked by scientists who recognized the opportunity to make environmental data generated by Agency-developed computer models more accessible to decision makers, and to develop more user-friendly interfaces for the models.

“I realized that the core concept of the game was designed to do what we want to do with much of our research—give decision makers a more informed understanding of the environmental impacts of the decisions they have to make in a very complex and dynamic world. If done correctly, the use of serious games can help non-scientists experience the ways in which their policy, investment, or purchase decisions can result in benefits or impacts to the environment and human health,” says EPA scientist Andy Miller, Ph.D., who approached CityOne developers after learning about their project.

Introduced at the IMPACT software conference, the game begins by placing users in the shoes of a Chief Operating Officer of an urban company and challenging him or her to successfully advise consultants on how to “revamp and revitalize” the energy grid, retail sectors, banking, and water utilities of the city. Nancy Pearson, IBM vice president of Service Oriented Architecture, Business Process Management, and WebSphere, adds, "CityOne will simulate the challenges faced in a variety of industries so that businesses can explore a variety of solutions and explore the business impact before committing resources."

CityOne urges users not only to take into account monetary and structural factors, but also to consider business climate, citizen quality of life, and the environmental impacts of any actions taken.

According to the game’s web site, the main goals of CityOne are to allow its users to “accelerate industry evolution, optimize processes and decisions, implement new technologies, and discover the impact” of their decisions.

The game presents challenges such as how to balance emissions and energy generation, and how to improve upon water systems that typically lose half their water to leaks before anything flows into homes and businesses. EPA’s own research includes extensive efforts on aging water infrastructure, and on the complex interactions of air quality, energy, and climate. EPA provides links to authentic research information as part of the game’s library.

In addition to supplying real-world data and expertise, EPA’s work is featured through hyperlinks that give interested gamers and others a place to learn more about modern, complex environmental problems.

CityOne will also raise awareness of EPA’s programs, providing CityOne users with additional information on environmental protection and associated issues. “EPA has a tremendous amount of environmental information that is available to anyone who is interested. The game gives us a way to reach out to a broader audience and let them know about the resources that they can draw upon when they want to dig deeper into an issue,” says Dr. Miller.

Ultimately, this “serious game” has serious real-world potential. The interactive game will provide industry and civic leaders with the means to build a better understanding of complex problems and to begin making more sustainable decisions for the future. Because the game also builds awareness of ongoing research programs, Agency scientists think the partnership will prove to be a real winner.


- Down the Drain: Wetlands as Sinks for Absorbing Reactive Nitrogen

EPA scientists conduct the first continental-scale analysis to estimate how much nitrogen is removed by wetland ecosystems across the U.S.

Duck hunters and those who fish, both recreationally and commercially, are already well acquainted with the value of wetlands. The economic benefits alone include providing essential habitat to some 75 percent of the fish and shellfish that are commercially harvested in the U.S. On top of that, wetlands absorb storm runoff, help prevent flooding, and naturally filter water.

EPA researchers are working to quantify another important value of wetlands: the ability to act as natural sinks that absorb “reactive” nitrogen (Nr). While perhaps not as obvious as providing sought-after recreational destinations or habitat for valuable fisheries, wetlands’ natural ability to absorb nitrogen released into the environment can be just as important.

As one of life’s “essential elements,” nitrogen is required for the normal growth and maintenance of all biological tissue. The development and widespread use of nitrogen-based fertilizers helped revolutionize agriculture, dramatically increasing crop yields and food production.

But too much nitrogen can mean trouble.

Released into the environment through agricultural practices and as a byproduct from sewage treatment and the combustion of fossil fuels, reactive nitrogen can lead to complex and far-reaching environmental and human health problems. Nitrogen carried off croplands by storm water runoff can harm aquatic systems even far downstream, sparking the growth of oxygen-depleting algae and leading to harmful algal blooms and hypoxia (low oxygen). Excess nitrogen in the form of nitrate is a drinking water contaminant and an increasing health concern.

As hotspots of biological productivity, especially for plants, algae, and microorganisms that absorb nitrogen as they grow, wetlands serve as natural “sinks” for removing and storing reactive nitrogen as it cycles through the environment in one form or another.

EPA researchers are exploring innovative ways to better quantify the benefits—what they refer to as “ecosystem services”—that wetlands and other natural systems provide to society. As part of that effort, Agency scientist Stephen J. Jordan, Ph.D. and his partners recently compiled a comprehensive database on nitrogen removal from the scientific literature on wetland studies. He and his colleagues conducted an extensive search across a host of scientific publications, and then added supplemental data through strategic internet-based queries reaching as far back as 1970. “Looking at nitrogen removal across such a large geographic scale had never been done before,” says Jordan.

After identifying between 400 and 500 journal articles, Jordan and his team analyzed the data using statistical and quality assurance methods, building a robust dataset from 190 separate studies. Using latitudes and other environmental data recorded in the studies, along with the extent of major wetland classes from the National Wetlands Inventory, the researchers were able to extend their analysis across the entire contiguous U.S.

Finally, the team was able to use statistical analyses to calculate the estimated total amount of reactive nitrogen removed by wetlands across the continent. “Having such a large set of data made a huge difference, allowing us to tease out differences that emerged from looking at nitrogen removal across a broad scale,” explains Jordan.

The researchers estimated that the major classes of wetlands found across the contiguous U.S. removed approximately 20-21% of the total amount of reactive nitrogen added to the environment by human activities. That adds up to some 5,803,140 metric tons of nitrogen removed before it could taint drinking water or contribute to “dead zones” off the coast by sparking algal blooms.
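The continental figure rests on combining per-area removal rates with mapped wetland extent and comparing the result with human-caused inputs. A minimal Python sketch of that bookkeeping follows, assuming a simple area-times-rate sum over wetland classes. The class names, areas, rates, and input total are hypothetical, and the sketch is not the meta-analytic model Jordan's team actually used.

```python
# Hypothetical sketch: scale per-area nitrogen removal rates up to a
# continental total and express it as a share of human-caused inputs.
# Class names, areas, rates, and input totals are illustrative placeholders,
# NOT values from Jordan et al.
from dataclasses import dataclass


@dataclass
class WetlandClass:
    name: str
    area_ha: float            # mapped extent of this wetland class (hectares)
    removal_kg_per_ha: float  # representative annual N removal (kg N per hectare)


def total_removal_tonnes(classes: list[WetlandClass]) -> float:
    """Sum area x removal rate over all classes and convert kg to metric tons."""
    kg = sum(c.area_ha * c.removal_kg_per_ha for c in classes)
    return kg / 1000.0


def share_of_inputs(removed_t: float, human_inputs_t: float) -> float:
    """Fraction of human-caused reactive nitrogen inputs removed by wetlands."""
    return removed_t / human_inputs_t


if __name__ == "__main__":
    classes = [
        WetlandClass("class A (hypothetical)", area_ha=1_000_000, removal_kg_per_ha=100.0),
        WetlandClass("class B (hypothetical)", area_ha=500_000, removal_kg_per_ha=200.0),
    ]
    removed = total_removal_tonnes(classes)
    print(removed, share_of_inputs(removed, 1_000_000.0))  # 200000.0 0.2
```

In the real analysis the per-area rates came from statistical modeling of the 190 studies rather than from fixed constants; the sketch only shows how such rates, once estimated, roll up into a single share.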

Extrapolating further, the researchers estimated that wetlands worldwide remove roughly 17% of human-caused reactive nitrogen releases, at least 26 metric tons.

The team’s findings were published in the scientific journal Ecosystems (Jordan, S., Stoffer, J., & Nestlerode, J., 2010. Wetlands as Sinks for Reactive Nitrogen at Continental and Global Scales: A Meta-Analysis. Ecosystems, 14(1), 144-155).

The results add considerable data to the “balance sheet” of ecosystem services provided by wetlands. And because some 50% of the world’s historic wetlands have been lost to human activities, there is a growing need to quantify and understand their true value so that decision makers, land use planners, and water quality managers can better protect human health and the environment.


- Innovative Tools Help EPA Scientists Determine Total Chemical Exposures

EPA scientists work to advance the science of chemical risk assessment.

Everyday activities – actions as simple as biting into an apple, or walking across a carpeted floor – may expose people to a host of chemicals through a variety of pathways. The air we breathe, the food and water we consume, and the surfaces we touch all are the homes of natural and synthetic chemicals, which enter our bodies through our skin, our digestive systems, and our lungs.

This makes determining how (and how much of) certain chemicals enter our bodies challenging. In most cases, there is not one single source for any given chemical that may be found in our bodies. Using sophisticated computer models and methods, EPA scientists have developed an innovative set of tools to estimate total exposures and risks from chemicals encountered in our daily lives.

“The traditional approach of assessing the risk from a single chemical and a single route of exposure (such as breathing air) may not provide a realistic description of real-life human exposures and the cumulative risks that result from those exposures,” said EPA scientist Dr. Valerie Zartarian. “Risk assessments within EPA are now evolving toward the ‘cumulative assessments’ mandated by the Food Quality Protection Act and the Safe Drinking Water Act.”

Moving the science of chemical risk assessments forward to where it’s possible to evaluate total risks from exposures to a wide variety of chemicals requires several key pieces of information. You need to know what chemicals are found in the environment, their concentration levels in the environment, and how they come into contact with humans. You also have to know how they enter the body, and what they do after that.

EPA’s Stochastic Human Exposure and Dose Simulation (SHEDS) model addresses the first part of this problem. SHEDS can estimate the range of total chemical exposures in a population from different exposure pathways (inhalation, skin contact, dietary and non-dietary ingestion) over different time periods, given a set of demographic characteristics. The estimates are calculated using available data, such as dietary consumption surveys; human activity data drawn from EPA's Consolidated Human Activities Database (CHAD); and observed chemical levels in food, water, air, and on surfaces like floors and counters.

The data on chemical concentrations and exposure factors used as inputs for SHEDS are based on measurements collected in EPA field studies and published literature values. “EPA’s observational exposure studies have also provided information and data to help define the processes simulated in the model, and evaluate or ‘ground-truth’ SHEDS model estimates,” said Zartarian, who co-developed the model with Dr. Jianping Xue, Dr. Haluk Ozkaynak, and others.

“The concept of SHEDS is to first simulate an individual over time,” she explained. “The model calculates that individual’s sequential exposures to concentrations in different media and across multiple pathways, and then applies statistical methods to give us an idea of how these exposures might look across a whole population.”
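Zartarian's description (simulate individuals over time, then summarize across a population) is the general pattern of a Monte Carlo exposure model. The sketch below illustrates that pattern only: the three pathways, their lognormal and uniform distributions, and all parameter values are hypothetical assumptions, and it omits the demographic and activity-diary inputs (such as CHAD data) that SHEDS draws on.

```python
# Simplified Monte Carlo sketch of the simulate-then-summarize pattern:
# simulate each individual day by day, sum exposure across pathways, then
# summarize the population distribution. Distributions and parameters are
# hypothetical placeholders; this is NOT SHEDS code or SHEDS input data.
import random
import statistics


def simulate_individual(rng: random.Random, days: int) -> float:
    """Return one simulated person's average daily exposure (arbitrary units)."""
    total = 0.0
    for _ in range(days):
        # Inhalation: air concentration x breathing rate.
        inhalation = rng.lognormvariate(0.0, 0.5) * rng.uniform(10.0, 20.0)
        # Dietary ingestion: residue in food x amount eaten.
        dietary = rng.lognormvariate(-1.0, 0.8) * rng.uniform(0.5, 2.0)
        # Dermal contact: surface residue x fraction transferred to skin.
        dermal = rng.lognormvariate(-2.0, 1.0) * rng.uniform(0.01, 0.1)
        total += inhalation + dietary + dermal
    return total / days


def simulate_population(n_people: int, days: int, seed: int = 1) -> list[float]:
    """Simulate many individuals with a seeded generator for reproducibility."""
    rng = random.Random(seed)
    return [simulate_individual(rng, days) for _ in range(n_people)]


if __name__ == "__main__":
    exposures = simulate_population(n_people=1000, days=30)
    cuts = statistics.quantiles(exposures, n=100)  # 99 percentile cut points
    print(f"median={cuts[49]:.2f}  95th percentile={cuts[94]:.2f}")
```

Reporting percentiles rather than a single average is what lets this kind of model describe the spread of exposures across a population, including the more highly exposed individuals.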

The story of how chemicals enter the human body doesn’t end there, however. The exposure estimates that SHEDS generates are now being used as inputs for another kind of model – a physiologically based pharmacokinetic (or PBPK) model, which predicts how chemicals move through and concentrate in human tissues and body fluids.

Using PBPK models, scientists can take the estimates of chemical exposures across multiple pathways generated by SHEDS, examine how these exposures will affect organs and tissues in the body, and determine how long the chemicals will take to be metabolized and eliminated.
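To show how exposure estimates can feed a dose model, here is a deliberately stripped-down stand-in: a single body compartment with first-order elimination, assuming a hypothetical elimination rate constant. A real PBPK model links many physiologically defined compartments, so this sketch illustrates only the idea of tracking body burden and half-life over time.

```python
# Greatly simplified stand-in for a PBPK model: ONE well-mixed body
# compartment with first-order elimination, driven by a daily intake series
# (for example, exposures from a population exposure model). Real PBPK models
# track many physiologically defined compartments (blood, liver, fat, ...);
# the elimination rate and intakes here are hypothetical.
import math


def body_burden(daily_intake: list[float], k_elim_per_day: float) -> list[float]:
    """Amount of chemical in the body at the end of each day.

    Each day's intake is treated as a dose absorbed at the start of the day;
    the total then decays exponentially for one day: A <- (A + dose) * exp(-k).
    """
    decay = math.exp(-k_elim_per_day)
    amount = 0.0
    burden = []
    for dose in daily_intake:
        amount = (amount + dose) * decay
        burden.append(amount)
    return burden


def half_life_days(k_elim_per_day: float) -> float:
    """Days for the body burden to halve once intake stops: ln(2) / k."""
    return math.log(2) / k_elim_per_day


if __name__ == "__main__":
    k = 0.1  # hypothetical elimination rate constant (per day)
    burden = body_burden([1.0] * 30 + [0.0] * 30, k)  # 30 days on, 30 days off
    print(f"half-life = {half_life_days(k):.1f} days")  # half-life = 6.9 days
    print(f"peak burden = {max(burden):.2f}, after 30 clear days = {burden[-1]:.3f}")
```

With steady daily intake the burden levels off where elimination balances intake; once intake stops it falls by half every `ln(2) / k` days.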

Together, these two models provide scientists with a much more accurate picture of the risk certain chemicals pose to human health – a picture they’ve been able to confirm by extensive comparisons against real-world data, such as duplicate diet and biometric data collected by the U.S. Centers for Disease Control and Prevention in the National Health and Nutrition Examination Survey (NHANES), which collects biomarker data from 5,000 people each year. When EPA researchers have compared the SHEDS-PBPK exposure and dose estimates with the physical NHANES data, they’ve found that the model’s predictions line up very closely with the observations in the survey.

“The real-world grounding gives you a lot of confidence in the exposure routes modeled in SHEDS and PBPK,” said Rogelio Tornero-Velez, an EPA scientist who has helped develop the Agency’s PBPK models used in the study.

SHEDS has already been used in developing EPA’s regulatory guidance on organophosphate and carbamate pesticides, and chromated copper arsenate, a chemical wood preservative once used on children’s playground equipment. Now, EPA researchers are using the coupled SHEDS-PBPK models to examine a relatively new class of chemical pesticides called pyrethroids to determine whether they pose any risk to human health and the environment.

EPA scientists are continuing to refine the SHEDS and PBPK models used in these studies, adding functions and testing them against real-world data. For policy makers, these models will serve as invaluable tools in making decisions meant to protect human health and the environment from the risk of exposure to harmful chemicals.

“The science and software behind SHEDS and PBPK are substantial,” said David Miller, who has worked on the project from EPA’s regulatory perspective. “They provide exposure and risk assessors both within and outside EPA with a physically-based, probabilistic human exposure model for multimedia, multi-pathway chemicals that is in many ways far superior to those that are presently in routine use.”


- The Future of Toxicity Testing is Here

EPA Tox21 partnership taps high-tech robots to advance toxicity testing.

Today around 80,000 chemicals are found in food and consumer products, and 1,500 new synthetic chemicals are introduced into the marketplace every year. Most of these chemicals, both new and old, have not been tested for toxicity, presenting a serious challenge to those tasked with protecting human health from potentially harmful chemical exposures.

EPA researchers are working to change that. They and their partners are advancing the scientific basis for hazard identification and risk assessment by helping usher in innovative new fields of study and by tapping powerful modern technologies such as supercomputers and robots.

As part of the Agency’s Computational Toxicology Research Program (CompTox), EPA has partnered with the National Institute of Environmental Health Sciences (NIEHS) National Toxicology Program, the National Institutes of Health (NIH) Chemical Genomics Center, and the Food and Drug Administration (FDA) in a collaboration known as Tox21. By combining their collective expertise and pooling existing resources, including research results, funding, and testing tools, Tox21 aims to revolutionize chemical toxicity testing by advancing high-throughput, mechanism-based test methods. The partnership is ushering in the future of toxicity testing.

That’s where the robots come in.

The collaboration recently unveiled a high-speed screening system that tests chemicals for harmful human health effects. The mechanized system greatly reduces the need for lab animals in toxicity testing, working instead with 3-by-5-inch test plates that are moved precisely through a series of steps by giant yellow, constantly moving robot arms. Each plate contains 1536 small wells that can hold living cells, typically skin, liver, or brain cells of rats or humans, and a sample of a particular chemical.

“Each one of those wells is filled with a single chemical sample that we use to expose the cells to, so in essence we have 1536 chemical toxicity tests on a single plate,” explains Dr. Robert Kavlock, Director of EPA’s National Center for Computational Toxicology. The robot uses a pin tool, which has a corresponding pin for each well, to dispense a drop or two of the chemical being tested. The robot’s arm then places the plate in a digital imaging device that scans the sample for changes in the cells that could indicate biological activity.

The robot receives software instructions on what types of biological activity to “look for” in the exposed cells. Positive results are flagged, triggering an alert that the computer sends to project scientists’ computers outside the 20-by-20-foot robot lab.
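The flagging step can be pictured as comparing each well's imaging readout against control wells on the same plate. The sketch below assumes a z-score rule with a 3-standard-deviation cutoff and a hypothetical control layout; the actual Tox21 software instructions and hit criteria are not described here, so treat this as an illustration of the general idea only.

```python
# Hypothetical sketch of automated "hit" flagging for one 1536-well plate:
# each test well's imaging readout is compared with the plate's
# negative-control (no-chemical) wells, and wells that deviate by more than a
# chosen number of standard deviations are flagged for scientists to review.
# The control layout, readout values, and 3-SD cutoff are assumptions, not the
# actual Tox21 analysis pipeline.
import statistics

WELLS_PER_PLATE = 1536  # 32 rows x 48 columns


def flag_hits(readouts: dict[int, float], control_wells: set[int],
              z_cutoff: float = 3.0) -> list[int]:
    """Return sorted indices of test wells whose |z| vs. controls exceeds z_cutoff."""
    controls = [readouts[w] for w in control_wells]
    mu = statistics.mean(controls)
    sd = statistics.stdev(controls)  # requires at least two control wells
    if sd == 0:
        raise ValueError("control wells show no variation; cannot compute z-scores")
    hits = []
    for well, value in readouts.items():
        if well in control_wells:
            continue
        if abs(value - mu) / sd > z_cutoff:
            hits.append(well)
    return sorted(hits)


if __name__ == "__main__":
    readouts = {w: 100.0 for w in range(WELLS_PER_PLATE)}
    controls = set(range(32))  # assume the first 32 wells hold controls
    for w in controls:
        readouts[w] = 99.0 if w % 2 else 101.0
    readouts[500] = 110.0  # strong increase in signal
    readouts[700] = 90.0   # strong decrease in signal
    print(flag_hits(readouts, controls))  # [500, 700]
```

Flagging both increases and decreases catches chemicals that activate or suppress the measured activity; a flag is a prompt for review, consistent with the follow-up interpretation described next.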

Scientists interpret the results so they can then identify chemicals that warrant further screening or study. Just because a chemical shows a reaction with an isolated cell (skin, liver, etc.) does not necessarily mean that it will have the same effect when interacting with a living person. To address this, Dr. Kavlock’s team at EPA is developing algorithms that predict whether a person’s organs and body as a whole will react to chemical exposure the same way their individual cells did in the preliminary tests.

Tox21 is revolutionizing the way chemical testing is done. Traditional toxicity testing is built on animal-based studies that require relatively large investments of time and resources, including money. In the past, EPA has had to test one chemical at a time, completing only a couple dozen assessments a year. “A single human researcher may work on ten chemicals a year, or 20,” says Kavlock. “We are doing 10,000 in a week.”

Tox21’s new robot system significantly reduces the cost and duration of chemical testing, which will allow EPA to better identify potentially harmful chemicals and serve its core mission to safeguard human health and the environment. It also allows the FDA to better analyze unexpected toxicity, opening the door to better drug development.

The partnership built around Tox21 and the high-tech robot testing is also an exemplary government partnership. "You read a lot in the papers about the duplications of government," Kavlock explained in a recent New York Times article about the project. "This is the case where there's un-duplication. We are really bringing—between NIEHS, NIH Genomics Center and EPA—a really significant complementary expertise."

Tox21’s robot system is now testing chemicals found in industrial and consumer products, food additives and drugs, for evidence they might lead to adverse health effects. The end goal is to create a comprehensive database detailing tested chemicals with particular emphasis on toxic and harmful ones. Thanks to the Tox21 collaboration and its new robot technology, the future of toxicity testing—and the protection of human health—is now.


- Supporting Decisions By the Pie Slice: EPA Researcher Serves Up Data

An EPA statistician is developing innovative ways to translate vast quantities of toxicological data into an accessible, visual format.

A picture paints a thousand words. Knowledge is power, but too much information can overwhelm. An expert makes decisions easier by knowing what to pay attention to and what to ignore. These sayings apply to the work of David Reif, Ph.D., an EPA statistician and geneticist who is developing innovative ways of harnessing huge amounts of information on toxic chemicals to better protect human health and the environment.

Reif has developed a new tool, called the Toxicological Priority Index (ToxPi), which profiles the interactions of chemicals with biological processes in ways the public and decision makers can easily understand. This is especially important because modern scientific methods are generating such a deluge of data that scientists say it can be overwhelming.

Using simple “pie slices” and other visual graphics, Reif can succinctly convey extensive scientific information about how some chemicals interact with the endocrine system, the body’s systems of glands and hormones. EPA is concerned with effects on the endocrine system because it regulates critical bodily functions such as growth, metabolism, and sexual development. When these functions are disrupted, the effects can lead to serious health problems, including infertility, birth defects and cancer, sometimes years after exposure.

ToxPi helps EPA officials and others better understand environmental chemicals and prioritize those that warrant further testing, and it can act as a check on existing chemical test results. The tool was developed as part of EPA’s ToxCast™ program, which takes a broad perspective in evaluating chemicals and maps their “toxicological signatures” to specific biochemical pathways in the body.

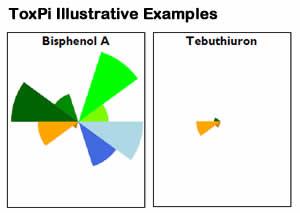

“ToxPi presents information in such a way that experts and the public alike can ‘look under the hood’ as we make all our data available,” Reif says. The larger and more numerous the “slices of pie” in a ToxPi figure, the greater the chemical’s potential for negative health impacts (see Figure 2 above on the chemicals Bisphenol A and Tebuthiuron).

The figure above illustrates ToxPi "slices" for two chemicals: Bisphenol A (BPA), which is used in plastics, and Tebuthiuron, an herbicide. Different colors show results from various types of in vitro assays, or tests, conducted to measure endocrine activity caused by the chemical. The larger green "slice" pointing to 2 o’clock for BPA, for example, illustrates that it produced a more potent reaction in estrogen receptor assays than Tebuthiuron did. BPA also ranks above Tebuthiuron in all other ToxPi slices.

The impetus for Reif’s development of decision-support tools, such as ToxPi, was new pesticide and drinking water laws mandating unique chemical testing regimens. These laws call on EPA to develop a screening program to assess the potential for chemicals to interact with the endocrine system. Because of their potential to disrupt still developing systems, endocrine disrupting chemicals are a high priority at EPA as it works to protect babies, infants, and others in the early life stages. Reif works with an interdisciplinary team that has already applied ToxPi to 309 chemicals, including 52 singled out for endocrine screening.

The tool provides a science-based and efficient way to prioritize chemicals for the new endocrine screening program. The screening prioritization process is supported by ToxPi’s impartial method for selecting chemicals for testing. ToxPi both adds to the reliability of information available for test chemical ranking and makes it understandable. The colored graphs generated by the tool illustrate “why we’re selecting this chemical for further testing,” Reif explains.
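One way to picture how ToxPi turns assay results into a ranking is sketched below. It assumes a common formulation in which each slice is scaled to the range 0 to 1 across the chemicals being compared, higher raw values mean more activity, and the overall score is a weighted average of the slices. The slice names, weights, and values are hypothetical, not ToxCast results.

```python
# Minimal sketch of a ToxPi-style priority score, ASSUMING this formulation:
# each slice's raw values are min-max scaled to [0, 1] across the chemicals
# being compared, and a chemical's overall score is the weighted average of
# its scaled slices (a slice's weight sets its share of the pie). Slice names,
# weights, and values are hypothetical, not ToxCast data.


def scale_slices(raw: dict[str, dict[str, float]]) -> dict[str, dict[str, float]]:
    """Map raw[chemical][slice] to values in [0, 1], slice by slice."""
    slice_names = next(iter(raw.values())).keys()
    scaled: dict[str, dict[str, float]] = {chem: {} for chem in raw}
    for s in slice_names:
        values = [raw[chem][s] for chem in raw]
        lo, hi = min(values), max(values)
        for chem in raw:
            # A slice with identical values for every chemical carries no ranking signal.
            scaled[chem][s] = 0.0 if hi == lo else (raw[chem][s] - lo) / (hi - lo)
    return scaled


def toxpi_scores(raw: dict[str, dict[str, float]],
                 weights: dict[str, float]) -> dict[str, float]:
    """Weighted average of scaled slice values for each chemical."""
    scaled = scale_slices(raw)
    total_w = sum(weights.values())
    return {chem: sum(weights[s] * v for s, v in slices.items()) / total_w
            for chem, slices in scaled.items()}


def priority_order(scores: dict[str, float]) -> list[str]:
    """Chemicals sorted from highest to lowest score (highest = test first)."""
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    raw = {
        "chem_a": {"estrogen_receptor": 5.0, "androgen_receptor": 1.0},
        "chem_b": {"estrogen_receptor": 1.0, "androgen_receptor": 1.0},
        "chem_c": {"estrogen_receptor": 3.0, "androgen_receptor": 2.0},
    }
    weights = {"estrogen_receptor": 3.0, "androgen_receptor": 1.0}
    scores = toxpi_scores(raw, weights)
    print(priority_order(scores))  # ['chem_a', 'chem_c', 'chem_b']
```

Because each slice's contribution is visible, the same numbers that produce the ranking can be drawn as the pie, which is the transparency Reif describes: the chart shows why a chemical landed where it did.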

ToxPi also saves costs by avoiding redundant testing and reduces the number of test animals required for evaluating chemicals. In addition, it focuses research on the impacts of greatest concern. Reif also says the tool is transparent and flexible in that new information can always be integrated into a chemical’s profile as it is collected.

The tool can also illustrate patterns of biochemical impacts beyond the endocrine system. Some EPA researchers are applying it to embryonic growth to understand developmental toxicity, and others are applying it to the human liver. In upcoming rounds of research, EPA plans to scale up the effort from around 300 to more than a thousand chemicals and to include exposure information, increasing the tool’s reach and utility.

For Reif, representing vast amounts of knowledge in visual ways can lead to powerful new insights that allow decision makers, researchers, and the public to broaden their understanding, refine their priorities, and become familiar with the science base needed for more effective environmental research and action. “Working with my ToxCast™ colleagues exposes me to multiple perspectives and disciplines. ToxPi allows us to bring these perspectives together in unique and accurate ways,” says Reif.


- Wildfire Research Confirms Health Hazards of Peat Fire Smoke

An EPA-led study finds associations between peat wildfire smoke and increased emergency room visits.

It may be tempting to breathe a sigh of relief if a rampant wildfire strays wide of your home. But don't breathe too deep; even if you can avoid the flames, you are not out of harm's way.

A research paper recently published by EPA researchers shows that peat wildfire smoke can increase the risk of serious respiratory and cardiovascular effects. This paper is the first to show an association between wildfire smoke and increased emergency room visits for symptoms of heart failure.

Peat is partially decomposed vegetable matter commonly found in wetland areas. Peat wildfires can smolder in the ground for months and are notoriously difficult to extinguish.

"A peat fire differs significantly from a forest fire or grassland fire where the fuel is timber or grasses," says Wayne Cascio, director of EPA's Environmental Public Health Division. "Peat fires burn at lower temperature, produce more smoke, and generate chemicals that are more irritating to the eyes and airways."

On June 1, 2008, a lightning strike in coastal North Carolina (NC) sparked a major wildfire. This smoldering conflagration burned for three months before it was considered 90% contained. The fire scorched more than 40,000 acres and was not officially declared "out" until January 5, 2009, according to the U.S. Fish and Wildlife Service, which manages Pocosin Lakes National Wildlife Refuge, where 60% of the fire's devastation occurred.

More threatening than the physical destruction of the lingering fire was the enormous smoke plume that hovered over much of eastern NC. "The lower temperature of the peat fire produces plumes of smoke that remain close to the ground and severely affect nearby communities," says Cascio.

Consequently, EPA researchers led a study examining the health risks associated with peat wildfire smoke from the 2008 fire. Using satellite imaging, researchers were able to identify counties most severely impacted by the peat fire's smoke pollution. Researchers then collected emergency room (ER) records from those smoke-affected counties for dates of highest smoke exposure and for dates of little or no smoke exposure. Data were also collected from neighboring counties that experienced much less smoke pollution over the same period of time. By comparing the ER records of smoke-affected days to those of smoke-free days, scientists found a marked increase in respiratory and cardiovascular ER visits in the heavily smoke-affected counties.

The study found that in smoke-affected counties, ER visits on days of heavy smoke exposure, compared with smoke-free days, increased by:

- 73% for Chronic Obstructive Pulmonary Disease (COPD)

- 65% for asthma

- 59% for pneumonia and bronchitis

- 37% for symptoms of heart failure

A paper describing the results of this study was published June 27, 2011, in the journal Environmental Health Perspectives.

Because peat is a mix of decomposing plant matter and soil, burning peat emits different chemicals than burning trees or grass, and the health effects of those chemicals have been poorly understood. This is the first study of a naturally occurring peat fire in the U.S. and the first known study to show that exposure to a peat fire can cause both respiratory and cardiovascular effects.

"One finding unique to this study is the observation that ER visits for heart failure increased," Cascio points out. "Previous wildfires have either shown no effect or a very weak positive effect, so our observation that ER visits for heart failure increased by 37% was surprising."

The study results also suggest that certain groups of people (older adults and those with pre-existing lung and heart problems) are more susceptible to the adverse effects of peat wildfire smoke.

The publication of this research comes at a particularly relevant time, as peat wildfires have been burning in coastal North Carolina since early May 2011. Scientists hope this research will help public health officials and local residents stay aware of local air quality and take appropriate actions to safeguard their health.

"The public is rightfully concerned about the potential for adverse health effects of the current peat fire in eastern NC," says Cascio. "This study can provide a context to educate the public on the actual risk to the population and what steps can and should be taken to lessen the health risk."

These findings may lead to further research into modeling weather and wind patterns to predict where a wildfire smoke plume will travel before extreme smoke exposure occurs. With such forecasting, susceptible populations could be warned of hazardous air conditions in advance and take action to limit their exposure and reduce adverse health impacts.


- The Science Behind Human Health: EPA’s Integrated Risk Information System

The IRIS program strengthens the scientific foundation that protects health and the environment.

It’s a fairly safe bet that at some point, we’ve all taken a drink of tap water from a kitchen faucet, inhaled deeply while enjoying an afternoon outside, or tracked soil into our homes. Americans perform these and dozens of other mundane daily activities without giving a second thought to potential harmful consequences, thanks in large part to the U.S. EPA’s Integrated Risk Information System (IRIS).

EPA plays a critical role in providing high-quality health information on chemicals of concern. The Agency’s IRIS assessment program is a key part of this effort and includes human health assessments of more than 540 chemical substances. These assessments provide a sound scientific basis for EPA decisions and are widely used by risk assessors, health professionals, and state, local, and international governments.

“EPA is committed to upholding the highest standard of scientific integrity in all of its activities,” said Paul Anastas, assistant administrator of EPA’s Office of Research and Development. “This means constantly seeking to improve, strengthen, and enhance our scientific work to reflect the best available information.” Continuous improvement of the IRIS program is an important part of this ongoing effort.

In early July, EPA announced changes to the program that will help ensure the Agency continues to use the best and most transparent science in pursuing its mission of protecting human health and the environment. These latest changes build upon significant improvements initiated by Administrator Lisa Jackson in 2009.

Since 2009, EPA has completed 16 assessments, more than it completed in the previous four years combined. The average time to complete an assessment has been reduced from three years or more to two years or less, and the backlog of assessments in the pipeline has shrunk. These improvements have been accompanied by a strong, continued emphasis on independent, transparent peer review of the IRIS program.

In April of this year, EPA received a report from the National Academy of Sciences (NAS) on its review of EPA’s draft IRIS assessment of formaldehyde. In the report, the NAS suggested ways to improve the IRIS process in two primary areas: accessibility and transparency.

Because EPA constantly seeks feedback from credible, independent scientific sources, it welcomed those suggestions and is in the process of fully incorporating them into the IRIS program. They include:

- Shorter, clearer, more concise and more transparent IRIS assessment documents.

- Reduced volume of text and increased clarity and transparency of data, methods and decision criteria.

- Consolidation of text into concise narrative descriptions.

- Online public posting of all studies used in the assessment development.

To make the scientific rationale of IRIS assessments as transparent as possible, EPA will evaluate the strengths and weaknesses of critical studies in a more uniform way. The Agency will also clearly indicate which criteria were most influential in weighing the scientific evidence that supports the chosen toxicity values.

EPA will also establish a dedicated advisory committee that will serve exclusively to help ensure the scientific rigor and transparency of IRIS assessments as the NAS recommendations are implemented. In addition, EPA will add a peer consultation step to the early stages of major IRIS assessments so that the scientific community can provide input as critical design decisions for individual assessments are made.

These changes will be implemented over the coming months in a tiered approach, with the most extensive changes applied to assessments in the earliest stages of development. “All the improvements to IRIS are part of the natural evolution that accompanies all rigorous scientific work,” said Anastas. “We will continue to consider information and perspectives from independent scientific sources and pursue improvements on an ongoing basis.”

Learn More